Exploring Driving-Aware Salient Object Detection via Knowledge Transfer

Jinming Su1,3 Changqun Xia2,* Jia Li1,2,*

1State Key Laboratory of Virtual Reality Technology and Systems, SCSE, Beihang University 2Peng Cheng Laboratory, Shenzhen, China 3Meituan

Published in IEEE ICME

Abstract

Recently, general salient object detection (SOD) has made great progress with the rapid development of deep neural networks. However, task-aware SOD has hardly been studied due to the lack of task-specific datasets. In this paper, we construct a driving task-oriented dataset in which pixel-level masks of salient objects are annotated. By comparing it with general SOD datasets, we find that the cross-domain knowledge difference and the task-specific scene gap are the two main challenges in focusing on salient objects when driving. Inspired by these findings, we propose a baseline model for driving task-aware SOD based on a knowledge transfer convolutional neural network. In this network, we construct an attention-based knowledge transfer module to bridge the knowledge difference. In addition, an efficient boundary-aware feature decoding module is introduced to perform fine feature decoding for objects in complex task-specific scenes. The whole network integrates the knowledge transfer and feature decoding modules in a progressive manner. Experiments show that the proposed dataset is very challenging and that the proposed method outperforms 12 state-of-the-art methods on the dataset, which facilitates the development of task-aware SOD.

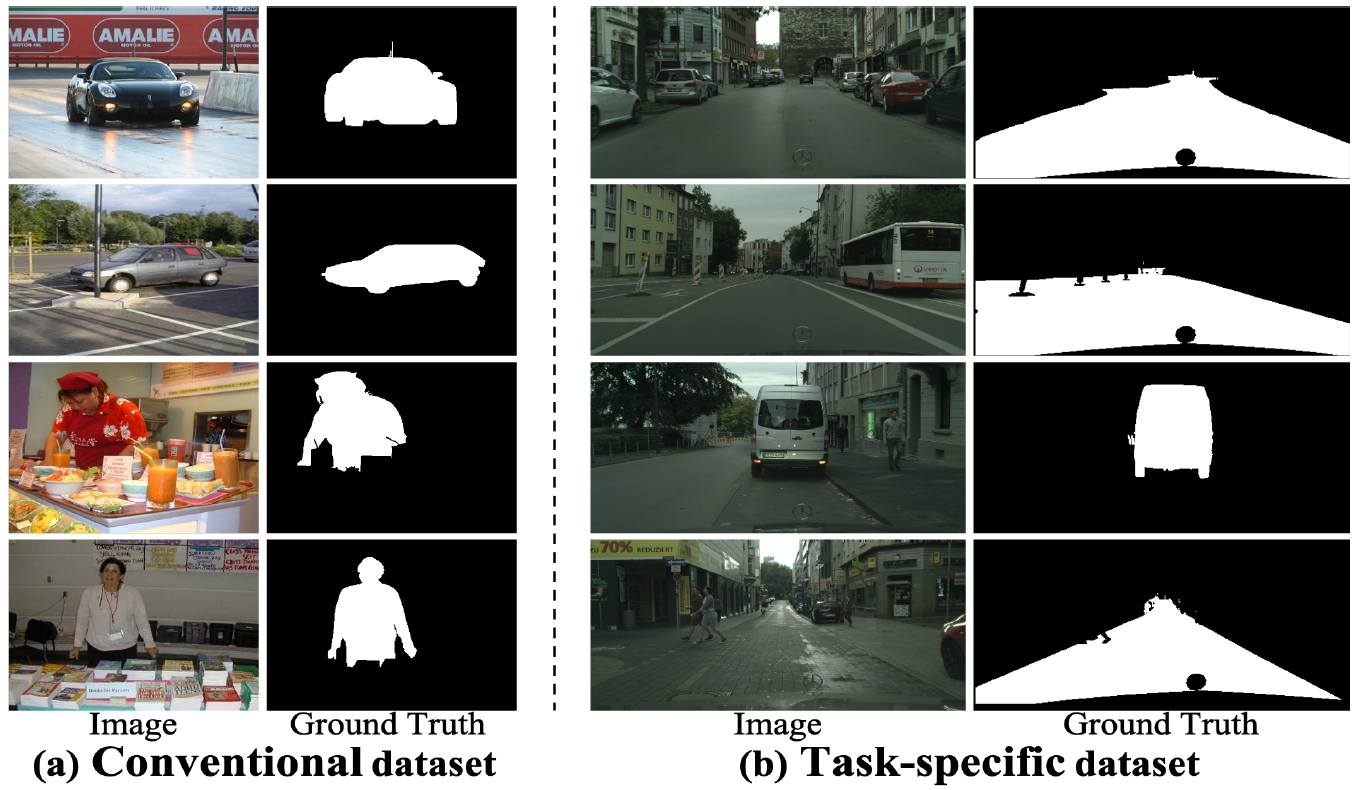

Dataset (CitySaliency)

Comparison of conventional and task-specific SOD datasets. Images and ground-truth masks of the (a) conventional dataset and (b) task-specific dataset are from DUT-OMRON and CitySaliency, respectively.

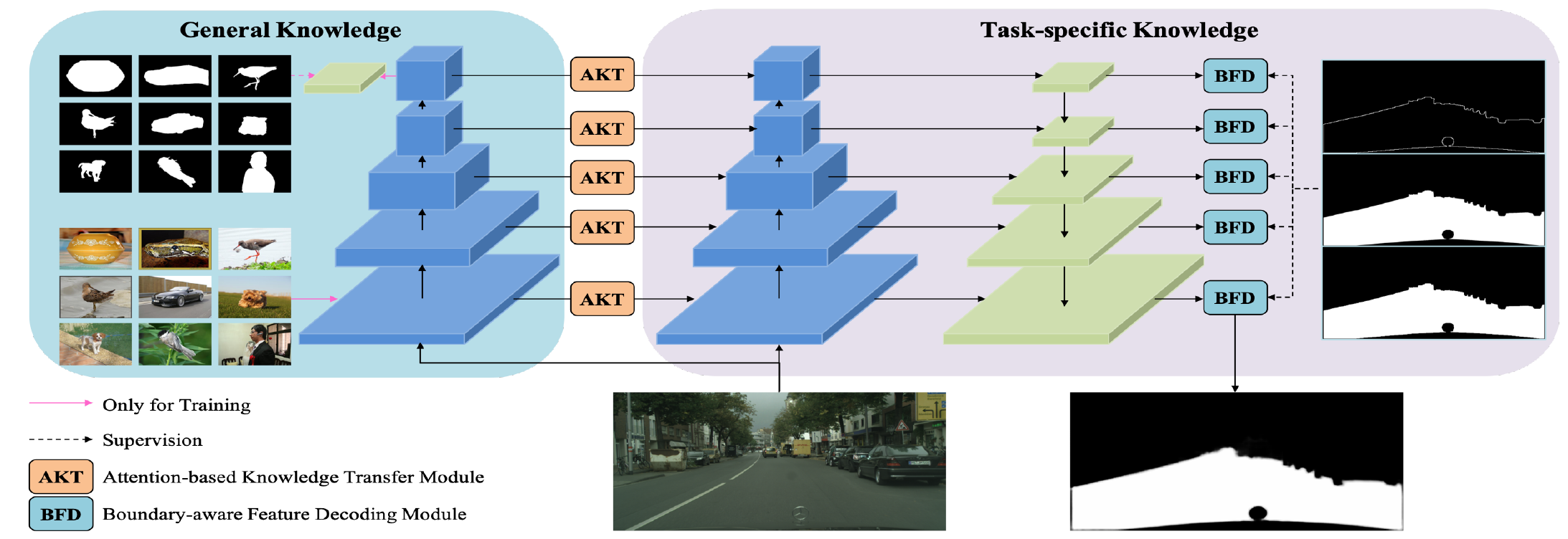

Method

The framework of the baseline. We first extract general knowledge with common SOD methods. The extracted knowledge is then transferred into task-specific knowledge by an attention-based knowledge transfer module (AKT) to deal with the cross-domain knowledge difference. After that, the task-specific knowledge is decoded by a boundary-aware feature decoding module (BFD) in a progressive manner to detect task-specific salient objects.
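The data flow described in this caption can be illustrated with a toy sketch. It is not the authors' architecture: the sigmoid gating used for the transfer step, the neighbour-difference boundary cue, and all function names (`attention_transfer`, `boundary_map`, `run_pipeline`) are assumptions made only for illustration, and the feature maps are plain nested lists rather than CNN tensors.

```python
import math


def sigmoid(x):
    """Logistic function mapping a real value into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def attention_transfer(general_feat, task_feat):
    """Hypothetical stand-in for AKT: gate general features with an
    attention map computed from task-specific features."""
    return [[g * sigmoid(t) for g, t in zip(g_row, t_row)]
            for g_row, t_row in zip(general_feat, task_feat)]


def boundary_map(saliency):
    """Hypothetical boundary cue: largest absolute difference between a
    pixel and its 4-connected neighbours."""
    h, w = len(saliency), len(saliency[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            for di, dj in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ni, nj = i + di, j + dj
                if 0 <= ni < h and 0 <= nj < w:
                    diff = abs(saliency[i][j] - saliency[ni][nj])
                    out[i][j] = max(out[i][j], diff)
    return out


def run_pipeline(general_levels, task_levels):
    """Progressively fuse transferred features level by level, then
    decode a saliency map and its boundary cue (toy stand-in for BFD)."""
    logits = None
    for g, t in zip(general_levels, task_levels):
        feat = attention_transfer(g, t)
        if logits is not None:
            # Progressive step: add the result of the previous level.
            feat = [[a + b for a, b in zip(ra, rb)]
                    for ra, rb in zip(feat, logits)]
        logits = feat
    saliency = [[sigmoid(v) for v in row] for row in logits]
    return saliency, boundary_map(saliency)


# Two feature levels of size 2x2; only the bottom-right pixel is active.
general_levels = [[[0.0, 0.0], [0.0, 4.0]], [[0.0, 0.0], [0.0, 2.0]]]
task_levels = [[[0.0, 0.0], [0.0, 10.0]], [[0.0, 0.0], [0.0, 10.0]]]
saliency, boundary = run_pipeline(general_levels, task_levels)
```

In this toy setting the bottom-right pixel receives the highest saliency, and the boundary cue is non-zero only where saliency changes between neighbouring pixels. A real implementation would replace each function with learned convolutional layers.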

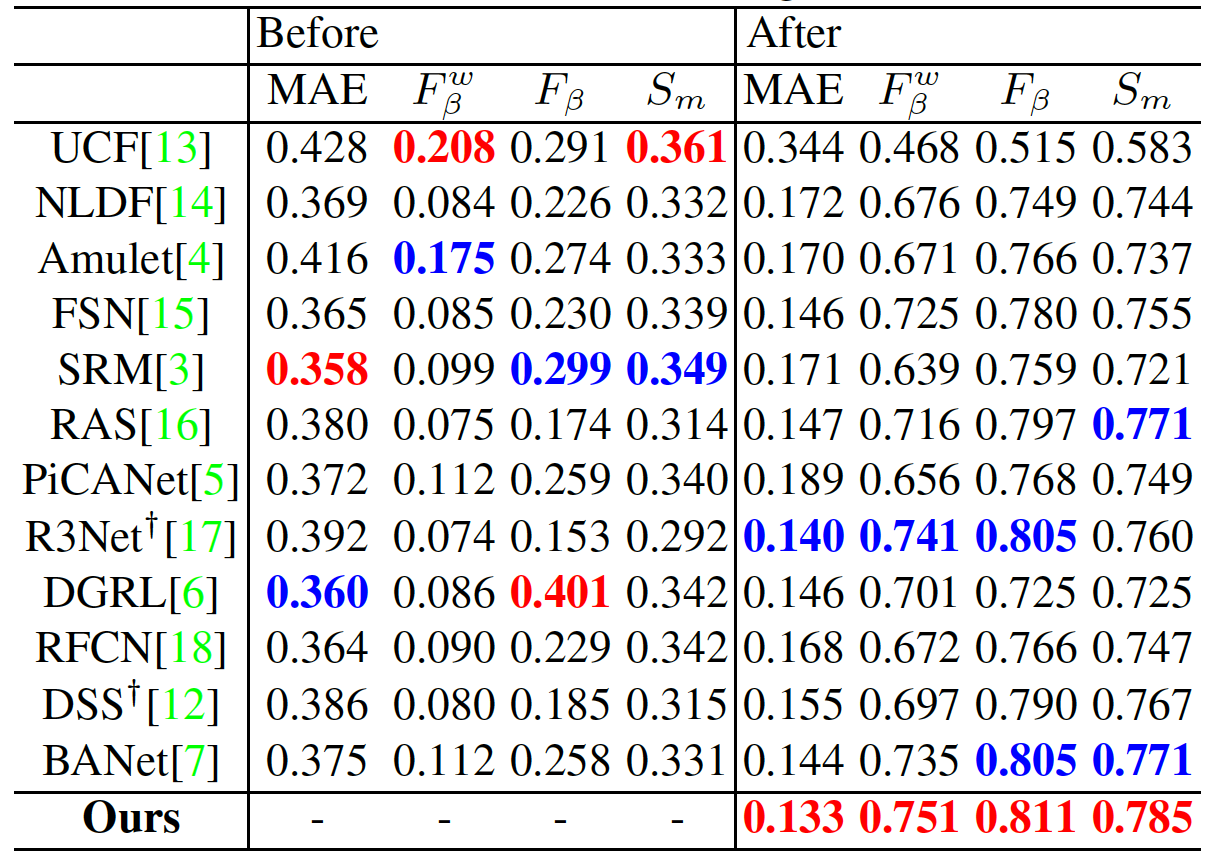

Quantitative Evaluation

Performance of 13 state-of-the-art models before and after fine-tuning on CitySaliency. Note that "R3Net" and "DSS" are equipped with Dense CRF. The best three results are shown in red, green, and blue.

Qualitative Evaluation

Representative examples of the state-of-the-art methods and our approach after being fine-tuned.

Resources

[GitHub]

[Dataset] code: 3j5r

[Results on CitySaliency testing set] code: 495k

[Model] code: m13w

Citation

Jinming Su, Changqun Xia and Jia Li. Exploring Driving-Aware Salient Object Detection via Knowledge Transfer. In ICME, 2021.

BibTeX:

@inproceedings{su2021exploring,
  title={Exploring Driving-Aware Salient Object Detection via Knowledge Transfer},
  author={Su, Jinming and Xia, Changqun and Li, Jia},
  booktitle={2021 IEEE International Conference on Multimedia and Expo (ICME)},
  pages={1--6},
  year={2021},
  organization={IEEE}
}