Distortion-adaptive Salient Object Detection in 360° Omnidirectional Images

Jia Li1,3 Jinming Su1 Changqun Xia3,* Yonghong Tian2,3,*

1State Key Laboratory of Virtual Reality Technology and Systems, SCSE, Beihang University 2National Engineering Laboratory for Video Technology, School of EE&CS, Peking University 3Peng Cheng Laboratory, Shenzhen, China

Published in IEEE JSTSP

Abstract

Image-based salient object detection (SOD) has been extensively explored in the past decades. However, SOD on 360° omnidirectional images is less studied owing to the lack of datasets with pixel-level annotations. To this end, this paper proposes a 360° image-based SOD dataset that contains 500 high-resolution equirectangular images. We collect representative equirectangular images from five mainstream 360° video datasets and manually annotate all objects and regions in these images with precise masks in a free-viewpoint way. To the best of our knowledge, it is the first publicly available dataset for salient object detection on 360° scenes. By observing this dataset, we find that distortion from projection, large-scale complex scenes and small salient objects are its most prominent characteristics. Inspired by these findings, this paper proposes a baseline model for SOD on equirectangular images. In the proposed approach, we construct a distortion-adaptive module to deal with the distortion caused by the equirectangular projection. In addition, a multi-scale contextual integration block is introduced to perceive and distinguish the rich scenes and objects in omnidirectional scenes. The whole network is organized in a progressive manner with deep supervision. Experimental results show that the proposed baseline approach outperforms the top-performing state-of-the-art methods on the 360° SOD dataset. Moreover, benchmarking results of the proposed baseline approach and other methods on the 360° SOD dataset show that the proposed dataset is very challenging, which also validates the usefulness of the proposed dataset and approach for advancing SOD on 360° omnidirectional scenes.

Dataset (360-SOD)

Representative examples of 360-SOD. Images and ground truth are shown as equirectangular images.

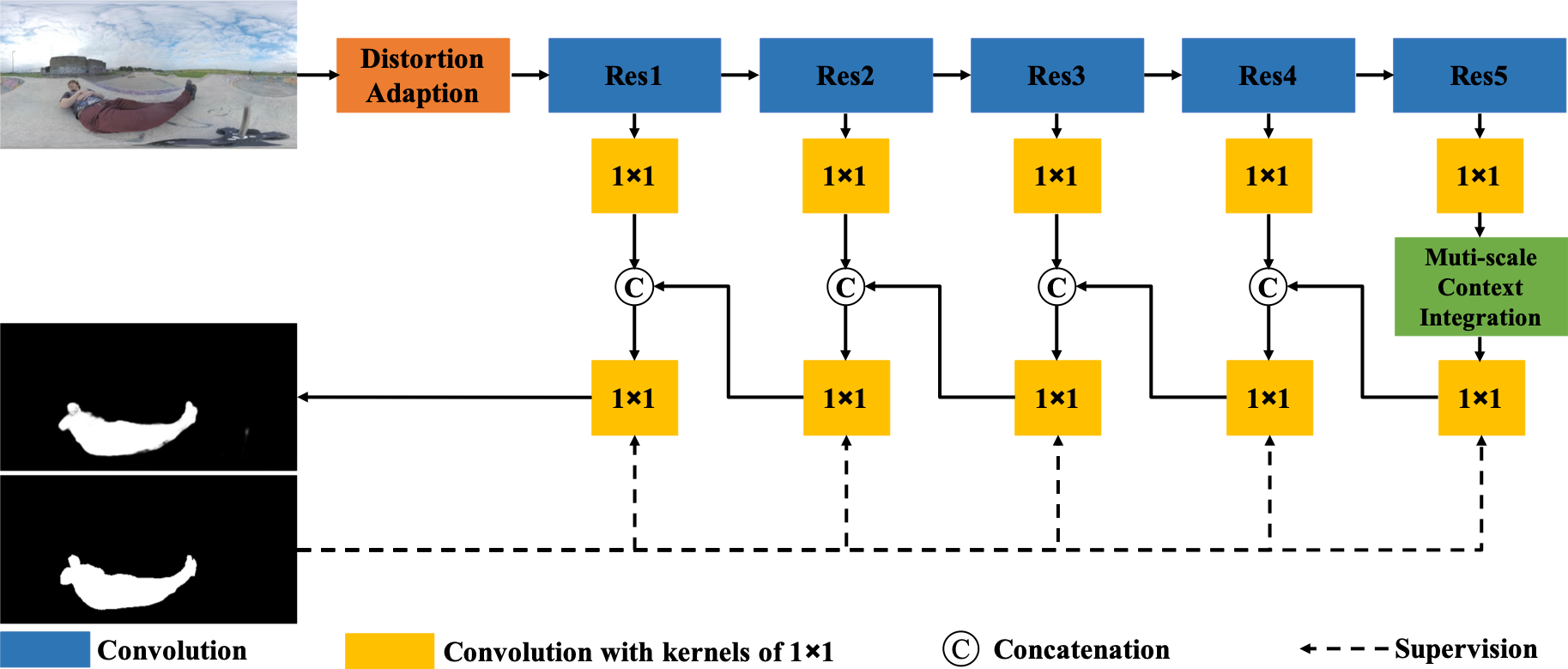
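For readers unfamiliar with the equirectangular format, the following is a minimal sketch of how a pixel in such an image corresponds to a direction on the viewing sphere. It is not part of the released code; the function names and the pixel-centre convention are assumptions made for illustration only.

```python
import math


def pixel_to_lonlat(x, y, width, height):
    """Map the centre of pixel (x, y) to (longitude, latitude) in radians.

    Assumed convention: x grows to the right, y grows downward,
    longitude spans [-pi, pi) and latitude spans (-pi/2, pi/2).
    """
    lon = ((x + 0.5) / width - 0.5) * 2.0 * math.pi
    lat = (0.5 - (y + 0.5) / height) * math.pi
    return lon, lat


def lonlat_to_unit_vector(lon, lat):
    """Convert spherical angles to a 3D unit vector on the viewing sphere."""
    return (
        math.cos(lat) * math.cos(lon),
        math.cos(lat) * math.sin(lon),
        math.sin(lat),
    )
```

Under this convention, the top row of the image maps to directions near the north pole and the middle row maps to the equator, which is why a mask drawn on the sphere looks stretched near the top and bottom of the equirectangular image.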

Method

The framework of our baseline model. The equirectangular image is first processed by a distortion-adaptive module, and the output is passed to ResNet-50 to extract features at different levels. The highest-level features are processed by a multi-scale context integration module to integrate contextual information. Moreover, the coarser-level saliency features are concatenated with the adjacent finer-level features to obtain finer saliency maps, so the whole network is organized in a progressive form.

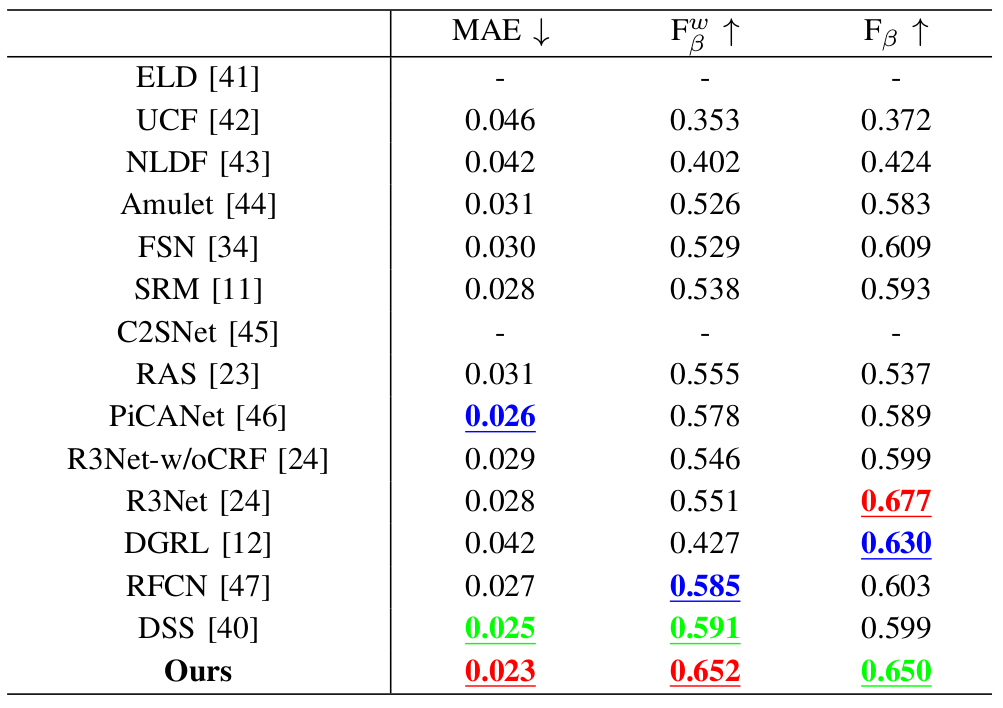
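The distortion that the distortion-adaptive module targets can be quantified with basic geometry. The sketch below is not the authors' module; it only computes, for each image row, how strongly the equirectangular projection stretches content horizontally (a factor of 1/cos(latitude)) and how much sphere area a pixel in that row represents. The function names and the pixel-centre convention are assumptions for illustration.

```python
import math


def row_latitudes(height):
    """Latitude (radians) of each pixel-row centre, top row first."""
    return [(0.5 - (y + 0.5) / height) * math.pi for y in range(height)]


def horizontal_stretch(height):
    """Per-row factor 1/cos(lat): widening of content relative to the equator."""
    return [1.0 / math.cos(lat) for lat in row_latitudes(height)]


def solid_angle_weights(height):
    """Per-row cos(lat), normalized to sum to 1: relative sphere area per pixel."""
    raw = [math.cos(lat) for lat in row_latitudes(height)]
    total = sum(raw)
    return [value / total for value in raw]
```

Rows near the equator have a stretch factor close to 1, while rows near the poles are stretched many times over, so the same object changes apparent shape depending on its vertical position in the image.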

Quantitative Evaluation

Performance of state-of-the-art methods and the proposed method after being fine-tuned on the 360-SOD dataset. Note that "R3Net-w/oCRF" means R3Net without Dense CRF and "-" means the results cannot be obtained. The best three results are shown in red, green and blue.

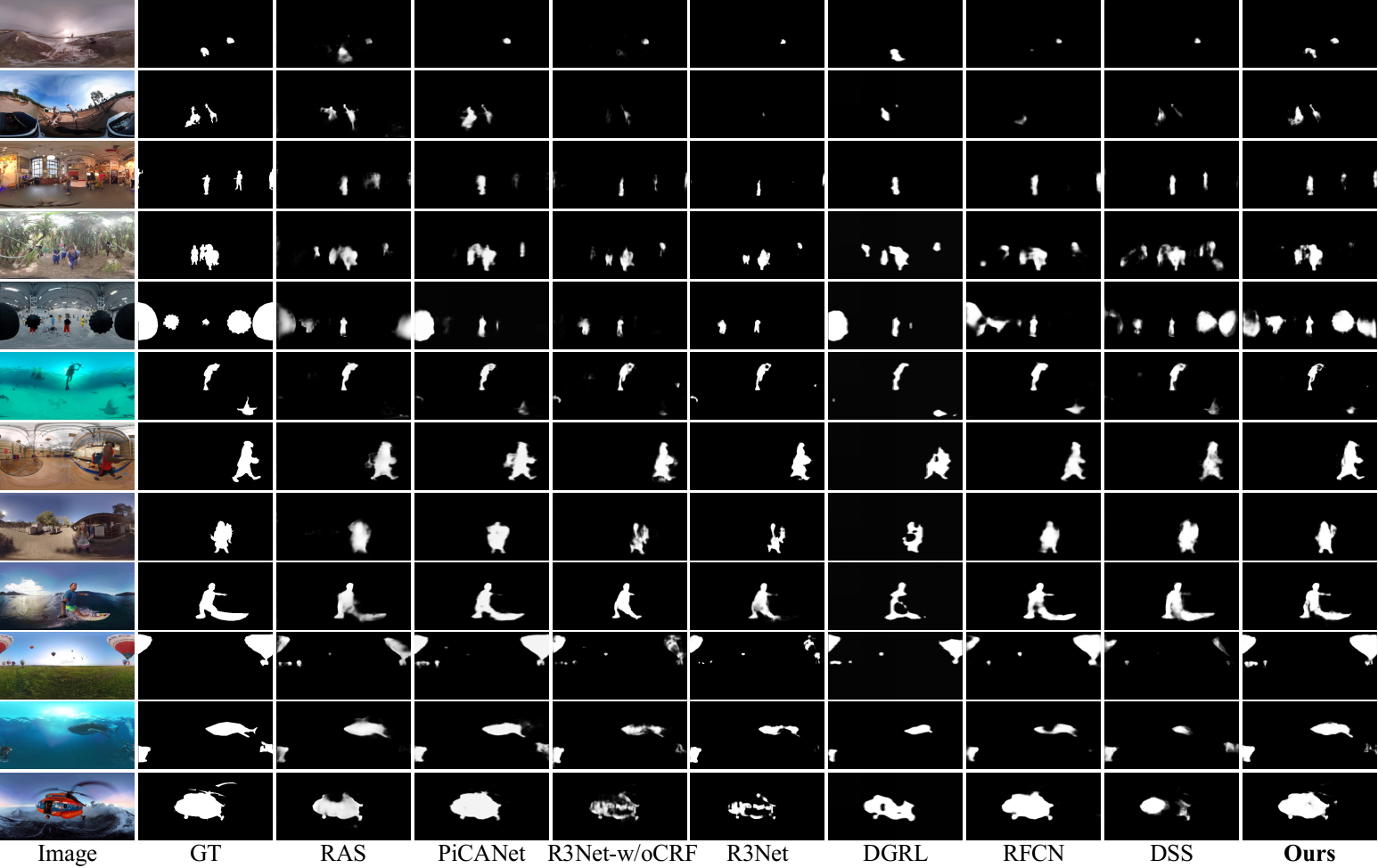

Qualitative Evaluation

Representative examples of the state-of-the-art algorithms after being fine-tuned on 360-SOD. GT means ground-truth masks of salient objects.

Citation

Jia Li, Jinming Su, Changqun Xia and Yonghong Tian. Distortion-adaptive Salient Object Detection in 360° Omnidirectional Images. In IEEE JSTSP, 2020.

paper: [PDF]

Google Drive:

[Project Resources]

Baidu Drive:

[Dataset. 89MB] code: gcul

[Results on 360-SOD testing set. 1MB] code: fpbf

[Code & Model. 115MB] code: u2ct

BibTeX:

@article{li2019distortion,
  title={Distortion-adaptive Salient Object Detection in 360° Omnidirectional Images},
  author={Li, Jia and Su, Jinming and Xia, Changqun and Tian, Yonghong},
  journal={IEEE Journal of Selected Topics in Signal Processing (JSTSP)},
  publisher={IEEE},
  year={2020},
  volume={14},
  number={1},
  pages={38-48}
}